Handling File Uploads in an Event-Driven Architecture

Introduction

In this tutorial, we will build a file-upload mechanism for an event-driven architecture. You may ask, “Isn’t file uploading the same in any kind of architecture?” Well, let me walk you through a scenario.

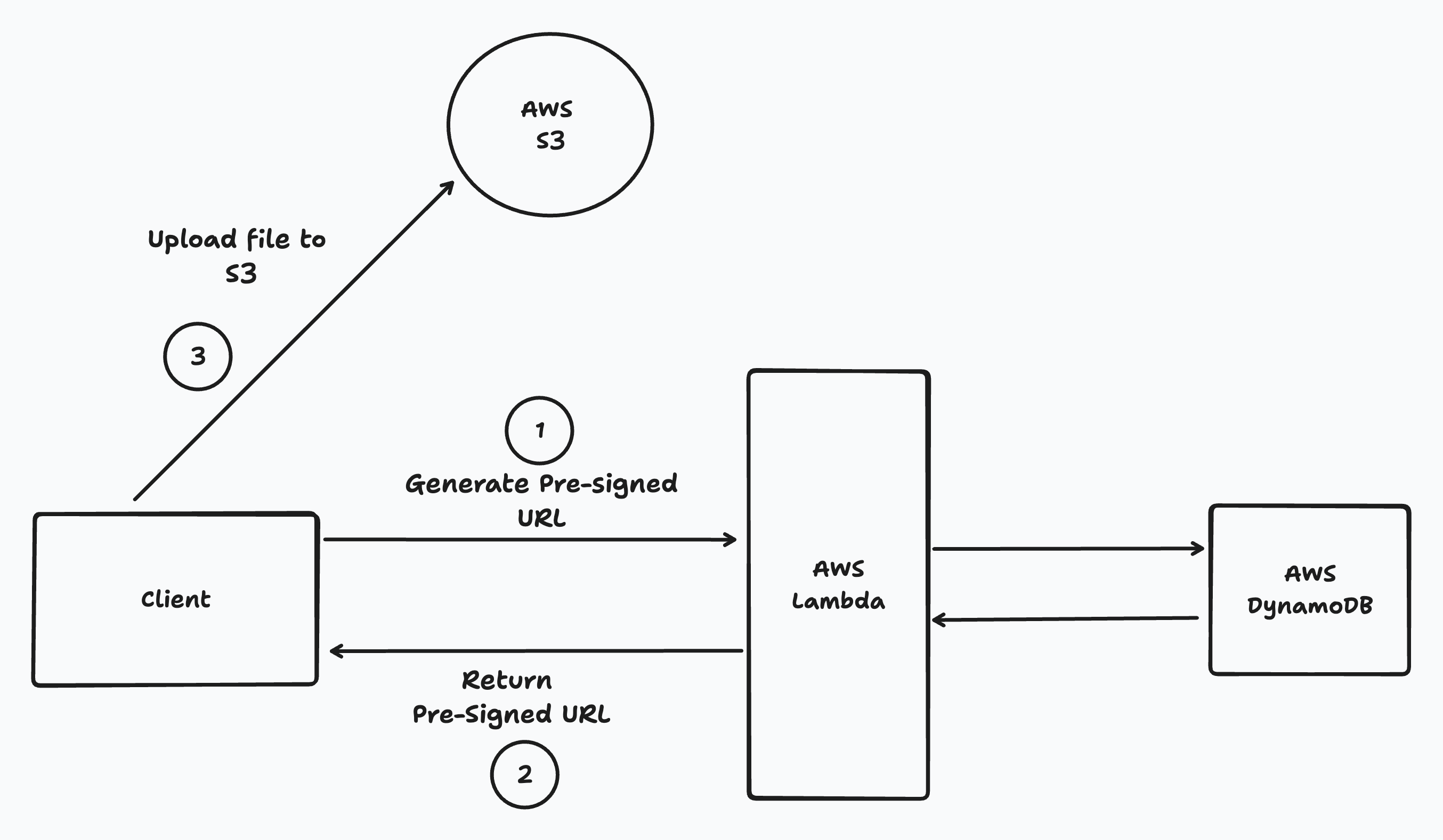

I recently worked on a project that required me to handle file uploads using AWS S3 and AWS Lambda. Since most applications we build nowadays use a Pre-Signed URL to upload files from clients directly to cloud providers, I built logic to generate a Pre-Signed URL that clients can use to upload files directly to S3.

In the diagram above, the client makes a POST request to the backend, and the backend generates a pre-signed URL for the upload. When the Lambda function generates the pre-signed URL, it also creates an entry in the DynamoDB table to record the file data. This saves the client from making another API call to update the database. Finally, the client uses that URL to upload the file directly to AWS S3.

The problem with the traditional approach

Everything went well until a file upload failed on the client side. Handling the file upload from the client side has two parts.

- Make an API call to generate a Pre-Signed URL.

- Once the URL is received, upload the file to AWS S3 using the Pre-Signed URL.

However, we ran into a significant issue with this traditional approach. Even though the API had responded successfully and a record existed in the database, users were unable to download their files. The cause was a failure in the second step, the upload to S3 from the client side, which left an entry in the database with no corresponding file in the S3 bucket.

This is a critical problem for users: they upload a file and assume it is saved, but the application never stored it.

Solution

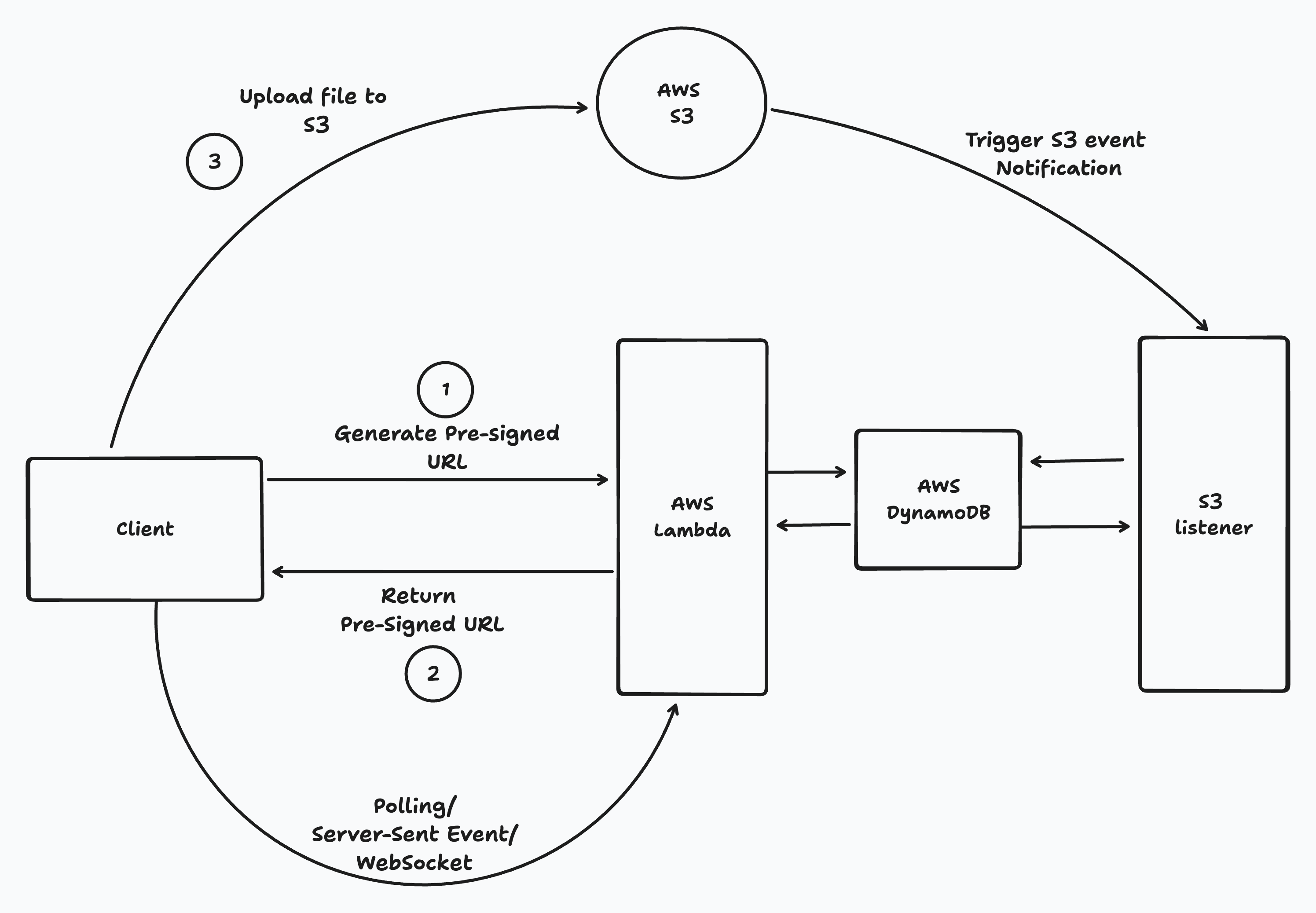

After brainstorming possible solutions to fix the problem properly, we decided to make the approach event-driven, as the application already uses event-driven architecture.

Our solution involved a slight modification to the architecture. When a client requests a Pre-Signed URL, the backend generates and sends it to the client. After the client uploads the file to S3, an S3 event notification is triggered. Our listener then consumes this event and creates an entry in DynamoDB. This ensures that there are no ‘ghost’ entries of files and maintains a clean list, thereby avoiding critical bugs in the application.
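To make the difference concrete, here is a minimal in-memory sketch, not the project's code. `FakeStorage`, `traditionalFlow`, and `eventDrivenFlow` are hypothetical names that stand in for S3, DynamoDB, and the two request flows, and the `uploadSucceeds` flag simulates whether the client's upload actually reaches the bucket.

```typescript
// Hypothetical in-memory stand-ins for the S3 bucket and the "files" table.
interface StoredRecord {
  id: string;
  uniqueFileName: string;
}

class FakeStorage {
  objects: string[] = [];       // keys of objects that actually reached the bucket
  records: StoredRecord[] = []; // rows in the metadata table

  // Simulates the client's upload to the pre-signed URL; it may fail.
  upload(key: string, succeeds: boolean): boolean {
    if (succeeds) {
      this.objects.push(key);
    }
    return succeeds;
  }

  // Records that point at a key with no object behind it.
  ghostRecords(): StoredRecord[] {
    return this.records.filter((r) => this.objects.indexOf(r.uniqueFileName) === -1);
  }
}

// Traditional flow: the record is written when the URL is generated,
// before anyone knows whether the upload will succeed.
function traditionalFlow(storage: FakeStorage, key: string, uploadSucceeds: boolean): void {
  storage.records.push({ id: `rec-${storage.records.length + 1}`, uniqueFileName: key });
  storage.upload(key, uploadSucceeds);
}

// Event-driven flow: the record is written only by the listener,
// which runs only after the object has been created in the bucket.
function eventDrivenFlow(storage: FakeStorage, key: string, uploadSucceeds: boolean): void {
  const created = storage.upload(key, uploadSucceeds);
  if (created) {
    // Stand-in for the s3:ObjectCreated:* notification reaching the listener.
    storage.records.push({ id: `rec-${storage.records.length + 1}`, uniqueFileName: key });
  }
}
```

With a failed upload, `traditionalFlow` leaves one ghost record behind, while `eventDrivenFlow` leaves the table empty.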

Implementation

To show how to implement the solution, let’s build a simple application with the Serverless Framework that uses AWS S3 and AWS Lambda. First, install the Serverless Framework globally:

    npm install -g serverless

Create a service using the command:

    serverless create --template aws-nodejs --path event-driven-file-upload

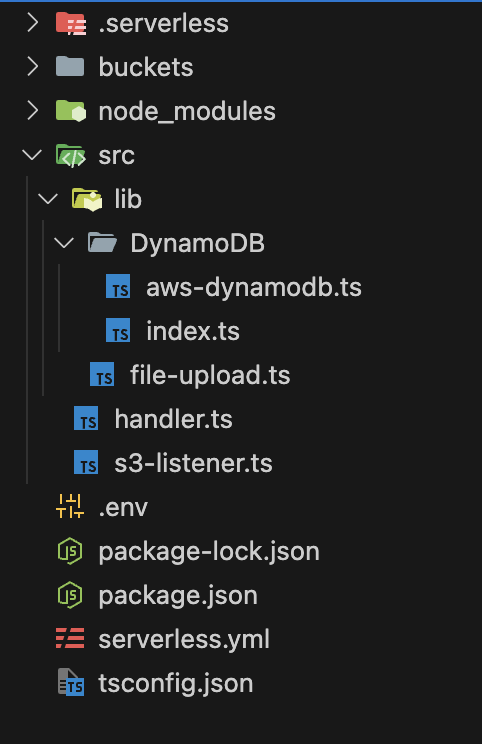

This command creates a new directory named event-driven-file-upload containing a basic project structure. Change into it:

    cd event-driven-file-upload

With the basic project structure in place, you can check out the code here.

The Serverless Framework manages all resources through serverless.yaml. Our configuration creates an S3 bucket, a DynamoDB table, an API handler, and an S3 event listener.

    provider:
      name: aws
      runtime: nodejs18.x
      timeout: 60
      httpApi:
        cors: true

- Provider Name: Specifies AWS as the cloud provider.

- Runtime: Uses Node.js 18.x, ensuring the Lambda functions run on this version.

- Timeout: Sets the maximum execution time for functions to 60 seconds.

- HTTP API CORS: Enables Cross-Origin Resource Sharing, allowing the API to be called from other origins.

      iamRoleStatements:
        - Effect: 'Allow'
          Action:
            - 's3:*'
            - 'lambda:InvokeFunction'
            - 'kms:Decrypt'
            - 'dynamodb:*'
          Resource: '*'

- Grants broad permissions to the Lambda functions to interact with other AWS services:

  - S3: Full access to handle file storage and retrieval.

  - Lambda: Permission to invoke other Lambda functions.

  - KMS: Allows decryption, which is needed when data is encrypted with KMS keys.

  - DynamoDB: Full access to perform database operations.

These wildcards keep the tutorial short. For production, restrict the actions and resources to what each function actually needs.

    functions:
      s3EventHandler:
        handler: src/s3-listener.handler
        events:
          - s3:
              bucket: file-upload-gm-bucket
              event: s3:ObjectCreated:*
              existing: true
      httpApiHandler:
        handler: src/handler.handler
        events:
          - httpApi: '*'

- s3EventHandler: An S3 event notification triggers this Lambda function whenever the client uploads a file to the S3 bucket, so s3EventHandler handles post-upload processing or notifications.

- httpApiHandler: This handles incoming HTTP API requests and serves as the interface for users or systems to interact with the service.

    resources:
      Resources:
        S3Bucket:
          Type: AWS::S3::Bucket
          Properties:
            BucketName: file-upload-gm-bucket
            CorsConfiguration:
              CorsRules:
                - AllowedOrigins:
                    - '*'
                  AllowedHeaders:
                    - '*'
                  AllowedMethods:
                    - GET
                    - PUT
                    - POST
                    - DELETE
                    - HEAD
                  MaxAge: 3000
        FilesTable:
          Type: AWS::DynamoDB::Table
          Properties:
            TableName: files
            ProvisionedThroughput:
              ReadCapacityUnits: 1
              WriteCapacityUnits: 1
            KeySchema:
              - AttributeName: id
                KeyType: HASH
            AttributeDefinitions:
              - AttributeName: id
                AttributeType: S
              - AttributeName: originalFileName
                AttributeType: S
            GlobalSecondaryIndexes:
              - IndexName: FileNameIndex
                KeySchema:
                  - AttributeName: originalFileName
                    KeyType: HASH
                Projection:
                  ProjectionType: ALL
                ProvisionedThroughput:
                  ReadCapacityUnits: 1
                  WriteCapacityUnits: 1

- S3Bucket: Defines an S3 bucket with CORS settings to handle web app requests. Specifies allowed origins, methods, and headers.

- FilesTable: Creates a DynamoDB table for storing file metadata, with a global secondary index on the original file name for quick lookup.
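As an illustration of that lookup, the sketch below builds the input for a DynamoDB Query against FileNameIndex. The helper name `buildFileNameQuery` is hypothetical, and the project's DBClient wrapper may build its queries differently. The object uses the low-level attribute-value format (`{ S: ... }`) that DynamoDB expects for string values.

```typescript
// Shape of the low-level DynamoDB Query input produced by this sketch.
interface FileNameQuery {
  TableName: string;
  IndexName: string;
  KeyConditionExpression: string;
  ExpressionAttributeValues: { [placeholder: string]: { S: string } };
}

// Hypothetical helper: query the "files" table through its FileNameIndex.
function buildFileNameQuery(originalFileName: string): FileNameQuery {
  return {
    TableName: 'files',
    IndexName: 'FileNameIndex',
    // The index's hash key is originalFileName, so it must appear here.
    KeyConditionExpression: 'originalFileName = :name',
    ExpressionAttributeValues: { ':name': { S: originalFileName } },
  };
}
```

An object like this could be passed to a Query call in the AWS SDK. Because several uploads can share the same original file name, such a query may return more than one item.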

    plugins:
      - serverless-offline
      - serverless-s3-local
      - serverless-plugin-typescript

- serverless-offline: Simulates AWS Lambda and API Gateway locally for development and testing.

- serverless-s3-local: Emulates S3 locally to test S3-triggered functions.

- serverless-plugin-typescript: Adds TypeScript support, allowing you to write your Lambda functions in TypeScript.

Once the configuration is in place, create a handler and an S3 listener to handle API requests and S3 events.

handler.ts

    import serverless from 'serverless-http';
    import express, { NextFunction, Request, Response } from 'express';
    import { nanoid } from 'nanoid';
    import cors from 'cors';
    import FileUpload from './lib/file-upload';
    import DBClient from './lib/DynamoDB/aws-dynamodb';

    const app = express();

    // Register CORS before the routes so that it applies to every endpoint.
    app.use(cors());

    app.get('/', async (req: Request, res: Response, next: NextFunction) => {
      return res.status(200).json({
        message: 'Hello from data!',
      });
    });

    // Look up the file record and return a signed download URL.
    app.get(
      '/files/:id/download',
      async (req: Request, res: Response, next: NextFunction) => {
        const dbClient = new DBClient();
        const id = req.params.id;
        if (id === undefined) {
          return res.status(400).json({
            error: 'Missing id',
          });
        }
        const file = await dbClient.get('files', 'id', id);
        if (!file) {
          return res.status(404).json({
            error: 'File not found',
          });
        }
        const fileUpload = new FileUpload();
        const url = await fileUpload.getSignedUrlForDownload(file.uniqueFileName);
        return res.status(200).json({
          url,
        });
      },
    );

    // Delete a file record by ID.
    app.delete(
      '/files/:id',
      async (req: Request, res: Response, next: NextFunction) => {
        const dbClient = new DBClient();
        const id = req.params.id;
        console.log('id', id);
        if (id === undefined) {
          return res.status(400).json({
            error: 'Missing id',
          });
        }
        const response = await dbClient.delete(id);
        return res.status(200).json(response);
      },
    );

    // List all file records, newest first.
    app.get('/files', async (req: Request, res: Response, next: NextFunction) => {
      const dbClient = new DBClient();
      const files = await dbClient.getAllFiles();
      if (!files) {
        return res.status(200).json([]);
      }
      files.sort((a: any, b: any) => {
        return new Date(b.createdAt).getTime() - new Date(a.createdAt).getTime();
      });
      return res.status(200).json(files);
    });

    // Generate a pre-signed URL that the client uses to upload directly to S3.
    app.post(
      '/get-url',
      async (req: Request, res: Response, next: NextFunction) => {
        // The body is parsed manually because no JSON body parser is registered.
        const request = JSON.parse(req.body);
        if (request.file_name === undefined) {
          return res.status(400).json({
            error: 'Missing file_name',
          });
        }
        const fileName = request.file_name;
        const contentType = request.content_type;
        const uniqueFileName = `${nanoid()}-${fileName}`;
        const fileUpload = new FileUpload();
        const url = await fileUpload.getSignedUrlForUpload(
          uniqueFileName,
          fileName,
          contentType,
          'public-read',
        );
        return res
          .header({
            'Access-Control-Allow-Origin': '*',
          })
          .status(200)
          .json({
            url,
          });
      },
    );

    // Fallback for any route that did not match.
    app.use((req: Request, res: Response, next: NextFunction) => {
      return res.status(404).json({
        error: 'Not Found',
      });
    });

    export const handler = serverless(app);

Initialization and Middleware

    import serverless from 'serverless-http';
    import express from 'express';
    import cors from 'cors';

    const app = express();

    app.use(cors());

This section sets up the Express.js application and integrates the CORS middleware to handle cross-origin requests, making the API accessible from different domains. The application is wrapped with serverless-http to facilitate deployment on AWS Lambda, allowing it to handle HTTP requests in a serverless environment.

    app.get('/files/:id/download', async (req, res) => {
      // logic to fetch files and generate download URL
    });

This endpoint retrieves a specific file’s details from a DynamoDB table using the file ID provided in the URL parameter. It then uses these details to generate a signed URL for securely downloading the file from an S3 bucket.

    app.delete('/files/:id', async (req, res) => {
      // logic to delete a file record
    });

This endpoint lets users delete a file record from DynamoDB based on the file ID. It also logs the file ID, which helps with debugging.

    app.post('/get-url', async (req, res) => {
      // logic to generate a signed URL for file uploads
    });

This endpoint handles POST requests to generate a pre-signed URL that clients can use to upload files directly to an S3 bucket. This setup enhances security by not exposing your server to direct file uploads.
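The validation step of this endpoint could be sketched as follows. This is an illustration under stated assumptions rather than the project's code: `parseUploadRequest` and `buildObjectKey` are hypothetical helpers, the fallback content type `application/octet-stream` is an assumption, and the ID is passed in as a parameter so the example does not depend on nanoid.

```typescript
// Result of validating the raw request body of POST /get-url.
type ParsedUpload =
  | { kind: 'ok'; fileName: string; contentType: string }
  | { kind: 'invalid'; error: string };

// Hypothetical helper: parse and validate the JSON body sent by the client.
function parseUploadRequest(rawBody: string): ParsedUpload {
  let body: unknown;
  try {
    body = JSON.parse(rawBody);
  } catch {
    // Without this guard, malformed JSON would surface as an unhandled error.
    return { kind: 'invalid', error: 'Body is not valid JSON' };
  }
  if (typeof body !== 'object' || body === null) {
    return { kind: 'invalid', error: 'Body must be a JSON object' };
  }
  const fields = body as { file_name?: unknown; content_type?: unknown };
  if (typeof fields.file_name !== 'string' || fields.file_name.length === 0) {
    return { kind: 'invalid', error: 'Missing file_name' };
  }
  // Assumption: fall back to a generic binary type when none is given.
  const contentType =
    typeof fields.content_type === 'string' ? fields.content_type : 'application/octet-stream';
  return { kind: 'ok', fileName: fields.file_name, contentType };
}

// Hypothetical helper: prefix the original name with a unique ID,
// mirroring the `${nanoid()}-${fileName}` pattern used in handler.ts.
function buildObjectKey(uniqueId: string, fileName: string): string {
  return `${uniqueId}-${fileName}`;
}
```

Returning a tagged result instead of throwing lets the route map an `invalid` result to a 400 response in one place.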

After implementing the API handler, let’s create an S3 listener that consumes S3 events in a Lambda function.

s3-listener.ts

    import { Handler, S3Event, Context, Callback } from 'aws-lambda';
    // aws-sdk (v2) is not preinstalled in the nodejs18.x runtime,
    // so it has to be listed in the package.json dependencies.
    import AWS from 'aws-sdk';
    import DBClient from './lib/DynamoDB/aws-dynamodb';
    import { nanoid } from 'nanoid';

    const awsS3 = new AWS.S3({
      region: 'us-east-1',
    });

    export const handler: Handler<S3Event, Context> = (
      event: S3Event,
      context: Context,
      callback: Callback,
    ) => {
      console.log('Event: ', JSON.stringify(event, null, 2));

      // Bail out early if the event carries no records.
      if (!event.Records || event.Records.length === 0) {
        callback(new Error('No records found'));
        return;
      }

      const s3Record = event.Records[0].s3;
      const bucketName = s3Record.bucket.name;
      const key = s3Record.object.key;

      // S3 URL-encodes keys in event notifications and replaces spaces with "+".
      const decodedKey = decodeURIComponent(key.replace(/\+/g, ' '));

      const headObjectParams = {
        Bucket: bucketName,
        Key: decodedKey,
      };

      awsS3
        .headObject(headObjectParams)
        .promise()
        .then(async (headObjectResponse) => {
          // S3 returns user-defined metadata keys in lowercase.
          const customMetadata = headObjectResponse.Metadata;
          const originalFileName = customMetadata?.originalfilename;
          const uniqueFileName = customMetadata?.uniquefilename;
          const contentType = customMetadata?.contenttype;

          const dbClient = new DBClient();
          const insertResponse = await dbClient.create(
            {
              id: nanoid(),
              uniqueFileName: uniqueFileName,
              originalFileName: originalFileName,
              contentType: contentType,
              createdAt: new Date().toISOString(),
            },
            'files',
          );

          // Report success to Lambda once the record has been stored.
          callback(null, 'Success');
        })
        .catch((error) => {
          console.error('Error while processing the S3 event: ', error);
          callback(error);
        });
    };

Lambda Handler Function

    export const handler: Handler<S3Event, Context> = (
      event: S3Event,
      context: Context,
      callback: Callback,
    ) => {
      console.log('Event: ', JSON.stringify(event, null, 2));
      ...
    };

This function is triggered by an S3 event. It uses the provided event, context, and callback parameters to process the event and handle asynchronous operations.

Processing S3 records

    const s3Record = event.Records[0].s3;
    const bucketName = s3Record.bucket.name;
    const key = s3Record.object.key;
    const decodedKey = decodeURIComponent(key.replace(/\+/g, ' '));

- Extracts the bucket name and object key from the first record of the S3 event.

- Decodes the S3 object key to handle special characters that may be encoded during the upload process.
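The decoding step can be tried in isolation. The sketch below uses hypothetical helper names (`decodeS3Key`, `extractUploadedObjects`) and a reduced record type that contains only the fields read here. Unlike the listener above, it maps over every record instead of reading only the first one, which is a defensive choice on my part rather than something the project requires.

```typescript
// Minimal subset of an S3 event notification record used in this sketch.
interface S3RecordLike {
  s3: { bucket: { name: string }; object: { key: string } };
}

// S3 URL-encodes object keys in event notifications and uses "+" for spaces,
// so "+" is turned back into a space before percent-decoding.
function decodeS3Key(rawKey: string): string {
  return decodeURIComponent(rawKey.replace(/\+/g, ' '));
}

// Collect the bucket name and decoded key of every record in the event.
function extractUploadedObjects(records: S3RecordLike[]): { bucket: string; key: string }[] {
  return records.map((record) => ({
    bucket: record.s3.bucket.name,
    key: decodeS3Key(record.s3.object.key),
  }));
}
```

For example, `decodeS3Key('my+report%281%29.pdf')` returns `my report(1).pdf`, and a literal plus sign, which arrives as `%2B`, is preserved.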

Fetching object metadata and interacting with DynamoDB

    const headObjectParams = {
      Bucket: bucketName,
      Key: decodedKey,
    };

    awsS3.headObject(headObjectParams).promise().then(async (headObjectResponse) => {
      const customMetadata = headObjectResponse.Metadata;
      ...
      const insertResponse = await dbClient.create({
        id: nanoid(),
        uniqueFileName: uniqueFileName,
        originalFileName: originalFileName,
        contentType: contentType,
        createdAt: new Date().toISOString(),
      }, 'files');
    });

- Retrieves metadata for the S3 object using headObject, which is important for accessing custom metadata set during the file upload.

- This metadata is used to create a new record in the DynamoDB table files. The record includes the original and unique file names, the content type, and a creation timestamp.
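One gap in the listener is that missing metadata silently produces a record with undefined fields. A minimal sketch of a stricter mapping is shown below. `buildFileRecord` is a hypothetical helper, not part of the project, and the ID and timestamp are passed in so the function stays deterministic. The lowercase key names match the ones the listener reads, since S3 returns user-defined metadata keys in lowercase.

```typescript
// Record shape written to the "files" table by the listener.
interface FileMetadataRecord {
  id: string;
  uniqueFileName: string;
  originalFileName: string;
  contentType: string;
  createdAt: string;
}

// Hypothetical helper: turn headObject metadata into a table record,
// failing loudly when a required metadata key is absent.
function buildFileRecord(
  metadata: { [key: string]: string } | undefined,
  id: string,
  now: Date,
): FileMetadataRecord {
  const meta = metadata || {};
  const required = ['originalfilename', 'uniquefilename', 'contenttype'];
  const missing = required.filter((name) => !meta[name]);
  if (missing.length > 0) {
    throw new Error(`Missing S3 metadata: ${missing.join(', ')}`);
  }
  return {
    id,
    uniqueFileName: meta.uniquefilename,
    originalFileName: meta.originalfilename,
    contentType: meta.contenttype,
    createdAt: now.toISOString(),
  };
}
```

Throwing here would route the failure into the listener's existing catch branch instead of storing an incomplete record.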

Check out the complete code here

Deployment

Once you have the complete code, you can deploy the API and the S3 listener using:

    serverless deploy

This deploys the Lambda functions to your AWS infrastructure and creates a URL for accessing the API. We have already built a client-side application that handles files and uploads them to S3.